Government legacy systems hold decades of institutional knowledge – eligibility rules, policy interpretations, edge cases learned the hard way. When agencies modernize these systems, the typical approach is to translate old software code into new software code. But this approach misses something fundamental: the knowledge embedded in these legacy systems is more valuable than the code itself.

SpecOps is a methodology I’ve been developing that flips the typical approach to legacy system modernization. Instead of using AI tools to convert, say, COBOL code into Java code, SpecOps uses AI to extract institutional knowledge from legacy code into plain-language specifications that domain experts can actually verify. The specification becomes the source of truth and guides spec-driven development of modern systems – update the spec first, then use the spec to update the code.

One way to think about it is like GitOps for system behavior – version-controlled specifications govern all implementations, creating an audit trail and enabling proper oversight of all changes.
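One way to make the GitOps analogy concrete is a CI-style check that flags drift between a versioned spec and the code that claims to implement it. The sketch below is hypothetical: the `Spec` and `SpecRule` types, the rule-ID scheme, and the assumption that tests tag the rule IDs they exercise are illustrations, not part of any published SpecOps tooling.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class SpecRule:
    rule_id: str  # stable identifier, e.g. "DEP-001" (hypothetical scheme)
    text: str     # plain-language statement a domain expert can review


@dataclass(frozen=True)
class Spec:
    version: str  # e.g. a release tag or commit of the spec repository
    rules: Tuple[SpecRule, ...]


def check_traceability(spec: Spec, implemented_version: str, tested_rule_ids: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means code and spec agree."""
    problems: List[str] = []
    # The implementation must declare the spec version it was built from.
    if implemented_version != spec.version:
        problems.append(
            f"implementation targets spec {implemented_version}, current spec is {spec.version}"
        )
    known_ids = {rule.rule_id for rule in spec.rules}
    # Every rule in the spec should be exercised by at least one test.
    for rule in spec.rules:
        if rule.rule_id not in tested_rule_ids:
            problems.append(f"no test covers rule {rule.rule_id}")
    # Tests should not cite rules that no longer exist in the spec.
    for rule_id in sorted(tested_rule_ids - known_ids):
        problems.append(f"test references unknown rule {rule_id}")
    return problems


spec = Spec(
    version="v2",
    rules=(
        SpecRule("DEP-001", "A qualifying child must meet the residency test."),
        SpecRule("DEP-002", "A qualifying child must meet the age test."),
    ),
)
print(check_traceability(spec, implemented_version="v1", tested_rule_ids={"DEP-001", "DEP-009"}))
# ['implementation targets spec v1, current spec is v2', 'no test covers rule DEP-002',
#  'test references unknown rule DEP-009']
```

Run on every pull request, a check like this is one possible way to enforce "update the spec first": a behavior change that skips the spec shows up as a version mismatch or an untested rule.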

Testing the approach with IRS Direct File

To flesh out this approach more fully, I built a demonstration using the IRS Direct File project – the free tax filing system that launched in 2024 and whose code is available on GitHub. It’s not “legacy” per se, but it is an ideal test case for several reasons: it has complex business logic interpreting the Internal Revenue Code, a multi-language codebase (TypeScript, Scala, Java), and a set of rules that tax policy experts can verify.

To support this demo, I created a reusable set of AI instructions (i.e., “skills” files) for analyzing tax system code:

- Tax Logic Comprehension — the foundation skill for understanding IRC references and tax calculations

- Standard Deduction Calculation — extracting standard vs. itemized deduction logic

- Dependent Qualification Rules — capturing the tests for qualifying children and relatives

- Scala Fact Graph Analysis — understanding the declarative knowledge graph structures

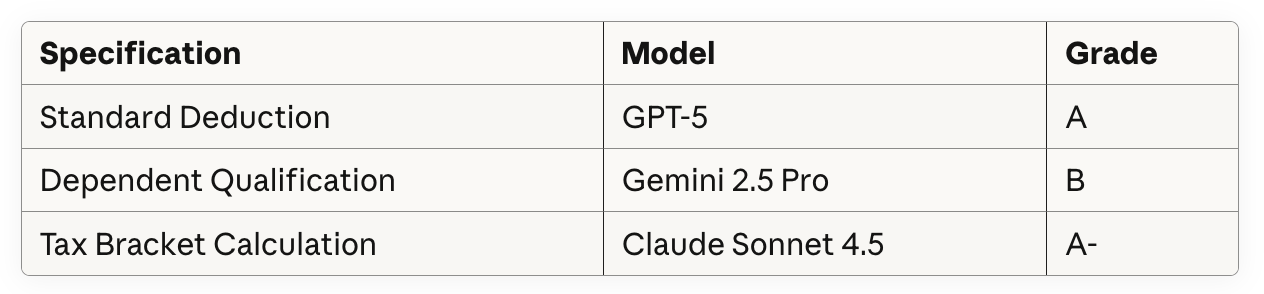
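To give a feel for the kind of structure the Standard Deduction Calculation and Scala Fact Graph Analysis skills ask a model to read, here is a toy model of a declarative fact graph in Python: writable facts are taxpayer inputs, and each derived fact declares its dependencies plus a pure function over them. This is a hypothetical illustration, not Direct File's actual Fact Graph format or API; the fact names are invented, the "larger of standard or itemized" rule is simplified and ignores the exceptions and limits real tax rules contain, and there is no cycle detection.

```python
from typing import Callable, Dict, Optional, Tuple

# A derived fact is declared, not computed imperatively: the names of the
# facts it depends on, plus a pure function over their values.
DerivedFact = Tuple[Tuple[str, ...], Callable[..., float]]


def evaluate(
    name: str,
    writable: Dict[str, float],
    derived: Dict[str, DerivedFact],
    cache: Optional[Dict[str, float]] = None,
) -> float:
    """Resolve a fact by recursively evaluating its dependencies (memoized)."""
    if cache is None:
        cache = {}
    if name in writable:  # writable facts are taxpayer inputs
        return writable[name]
    if name not in cache:
        deps, fn = derived[name]
        cache[name] = fn(*(evaluate(dep, writable, derived, cache) for dep in deps))
    return cache[name]


# Hypothetical fact names; simplified rule: take the larger of the two deductions.
DERIVED: Dict[str, DerivedFact] = {
    "deduction": (("standardDeduction", "itemizedTotal"), max),
    "taxableIncome": (("agi", "deduction"), lambda agi, ded: max(0.0, agi - ded)),
}

# Made-up inputs for illustration, not actual IRS amounts.
inputs = {"agi": 50000.0, "standardDeduction": 14000.0, "itemizedTotal": 9000.0}
print(evaluate("taxableIncome", inputs, DERIVED))  # 36000.0
```

Because each derived fact names its inputs, a reader (human or model) can walk the chain from `taxableIncome` back to the inputs, which is exactly what a plain-language spec then needs to describe rule by rule.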

To run the demo, I pointed three different AI models (GPT-5, Gemini 2.5 Pro, and Claude Sonnet 4.5) at actual code samples from the Direct File GitHub repo and asked them to generate specifications.
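Because the skills are plain text, the same instructions can be handed to any model unchanged. The sketch below assumes each vendor's SDK is wrapped behind a hypothetical `generate(prompt) -> str` function; the prompt wording and the function names are illustrative and are not the prompts used in the demo repository.

```python
from typing import Callable, Dict, List

# Hypothetical adapter type: wrap any vendor's client as prompt -> response text.
Generate = Callable[[str], str]


def build_prompt(skills: List[str], code_sample: str, language: str) -> str:
    """Combine plain-text skill instructions with a code sample into one prompt."""
    skill_block = "\n\n".join(skills)
    return (
        f"{skill_block}\n\n"
        f"Read the following {language} code and write a plain-language "
        "specification of the business rules it implements, so that a "
        "domain expert can verify them.\n\n"
        "--- BEGIN CODE ---\n"
        f"{code_sample}\n"
        "--- END CODE ---"
    )


def extract_specs(
    models: Dict[str, Generate], skills: List[str], code_sample: str, language: str
) -> Dict[str, str]:
    """Send the identical prompt to every model so their specs can be compared."""
    prompt = build_prompt(skills, code_sample, language)
    return {name: generate(prompt) for name, generate in models.items()}


# Stub "models" stand in for real vendor clients in this sketch.
stubs: Dict[str, Generate] = {
    "model-a": lambda prompt: f"spec from model-a ({len(prompt)} chars of prompt)",
    "model-b": lambda prompt: "spec from model-b",
}
specs = extract_specs(stubs, ["Explain IRC references in plain language."], "val x = 1", "Scala")
```

Keeping the vendor-specific code behind a single function signature is what lets the skills, rather than any one SDK, carry the domain knowledge.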

Results

Here are the results of my first attempt at running this demo.

All three models successfully extracted business logic into plain language suitable for domain expert review. A tax policy analyst could look at the generated specs and say “yes, that’s correct” or “no, you’re missing the residency requirement” – something they probably could not do, or at least not as easily, by staring at raw software code.
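A lightweight aid (not a substitute for the expert) is a checklist that flags obvious gaps before review, such as a missing residency requirement. The sketch below is hypothetical: keyword matching can only tell whether an element is mentioned, not whether it is stated correctly. The checklist uses the commonly cited qualifying-child tests (relationship, age, residency, support, joint return) as an illustration; it is not a complete statement of the law, and the cue phrases are assumptions.

```python
import re
from typing import Dict, List, Tuple

# Illustrative cue phrases for each qualifying-child test (assumed, not exhaustive).
CHECKLIST: Dict[str, Tuple[str, ...]] = {
    "relationship": ("relationship", "son", "daughter", "stepchild", "sibling"),
    "age": ("age", "under 19", "under 24"),
    "residency": ("residency", "lived with", "more than half the year"),
    "support": ("support",),
    "joint return": ("joint return",),
}


def missing_elements(spec_text: str, checklist: Dict[str, Tuple[str, ...]] = CHECKLIST) -> List[str]:
    """List checklist elements with no matching cue phrase in the spec text."""
    lowered = spec_text.lower()

    def mentions(cue: str) -> bool:
        # Word boundaries keep "son" from matching inside "person".
        return re.search(rf"\b{re.escape(cue)}\b", lowered) is not None

    return [element for element, cues in checklist.items() if not any(mentions(c) for c in cues)]


sample = (
    "Relationship: the child is the taxpayer's son, daughter, stepchild, or sibling. "
    "Age: under 19 at the end of the year, or under 24 if a full-time student. "
    "Support: the child did not provide more than half of their own support. "
    "Joint return: the child did not file a joint return."
)
print(missing_elements(sample))  # ['residency']
```

The output tells a reviewer where to look first; the judgment about whether each rule is right still belongs to the domain expert.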

Notably, these results came from single prompts without iteration. The same skills worked across different AI vendors, suggesting that the SpecOps approach is portable across models.

Why this is important for government agencies

An important point I want to emphasize about the SpecOps approach is that it can be used whether a legacy system modernization is planned for the near term or is still several years out. SpecOps is designed to help aggregate and document knowledge about important government systems – there’s never a bad time to do that work.

Agencies can begin extracting specifications from legacy systems today, while institutional knowledge still exists and subject matter experts are still available. When modernization eventually happens – whether in two years or ten – agencies will have:

- Verified documentation for how systems actually behave

- Durable, version-controlled specifications that outlast any particular technology stack

- A foundation that makes future modernization faster, less risky, and less expensive

The alternative is waiting until an agency is forced to modernize, then scrambling to reverse-engineer systems after the people who understood them may have retired.

Areas for further exploration

This demo also opens several questions worth investigating further:

- Verification at scale: Can policy experts efficiently review AI-generated specs? Initial feedback suggests yes, but more testing is definitely needed.

- Failure modes: The relatively low grade for Dependent Qualification indicates room for improvement. What would produce a more highly rated spec? A different model? A refined skill file? A better prompt (or prompts)?

- Skill refinement: The demo worked fairly well on the first attempt. How much could iterative prompting change or improve the resulting spec files?

The demo repository for this effort is public and designed for replication. I’d welcome others testing this approach with different AI models, different code samples, or different domains entirely. I hope others become as excited about the potential for this approach as I am.

Get involved

- SpecOps Methodology: https://spec-ops.ai

- Demo Repository: https://github.com/spec-ops-method/spec-ops-demo

- SpecOps Discussion: https://github.com/spec-ops-method/spec-ops/discussions

If you work in government technology, tax policy, or legacy modernization, I’d especially value your perspective on whether the generated specifications seem genuinely reviewable by domain experts. That’s the core claim that makes SpecOps viable.

The code for all government systems will eventually be replaced. The important question for those of us who work on and with those systems is whether the knowledge of how they are supposed to work survives that transition.
